InfiniBand

About InfiniBand

InfiniBand is an industry-standard specification that defines an input/output architecture used to interconnect servers, communications infrastructure equipment, storage and embedded systems.

A true fabric architecture, InfiniBand uses switched, point-to-point channels with data transfer rates that generally lead the industry, both in chassis backplane applications and over external copper and optical fiber connections. Reliable messaging (send/receive) and memory manipulation semantics (RDMA) operate without software intervention in the data movement path, which keeps latency low and application performance high.

This low-latency, high-bandwidth interconnect requires only minimal processing overhead and is well suited to carrying multiple traffic types (clustering, communications, storage, management) over a single connection. As a mature and field-proven technology, InfiniBand is used in thousands of data centers for both HPC and AI clusters that scale efficiently up to thousands of nodes. This scalability is crucial for frameworks that distribute processing across multiple compute nodes to handle complex and computationally intensive tasks. With long-reach InfiniBand over Metro and WAN technologies, InfiniBand can extend RDMA performance between data centers, across campuses, and around the globe.

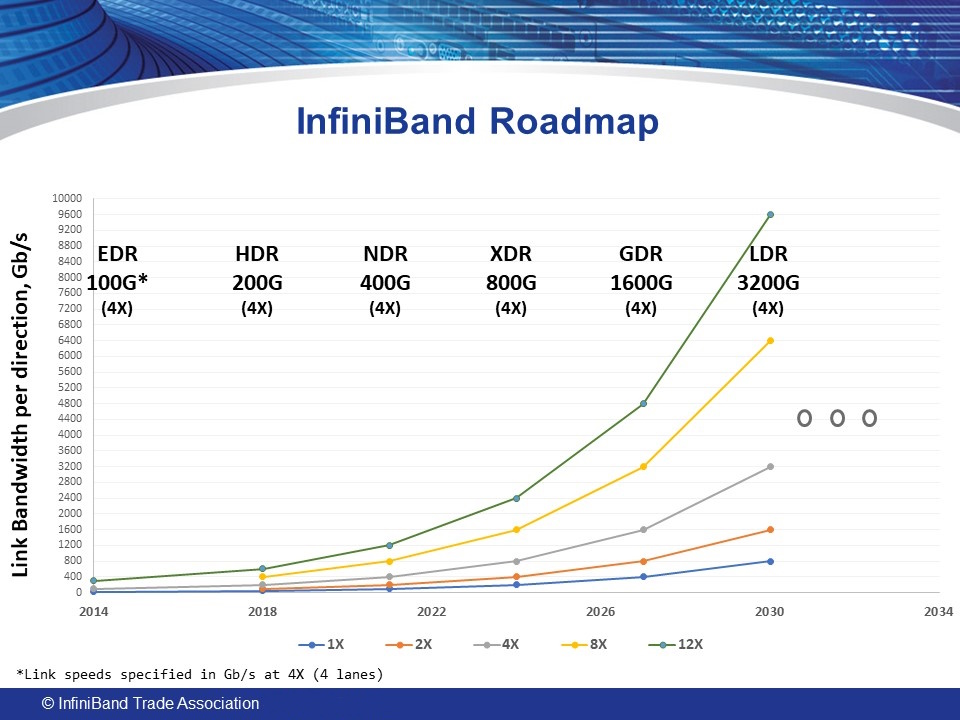

NDR 400Gb/s InfiniBand is shipping today, and the technology has a robust roadmap defining increased speeds well into the future. The current roadmap reflects projected demand for higher bandwidth, with GDR 1.6Tb/s InfiniBand products planned for the 2028 timeframe.

RDMA = Remote Direct Memory Access

InfiniBand Roadmap

The InfiniBand roadmap details 1x, 2x, 4x, and 12x port widths. The roadmap is intended to keep the rate of InfiniBand performance increase in line with systems-level performance gains.
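
The relationship between port width and link bandwidth can be illustrated with a little arithmetic. The sketch below assumes that the headline figures quoted above (400Gb/s for NDR, 1.6Tb/s for GDR) refer to a 4x port and that bandwidth scales linearly with the number of lanes. Neither assumption is stated in the text above. The function names are hypothetical and used only for illustration.

```python
# Port widths named in the roadmap text: 1x, 2x, 4x, and 12x.
PORT_WIDTHS = (1, 2, 4, 12)


def per_lane_rate(headline_gbps: float, headline_width: int = 4) -> float:
    """Per-lane rate in Gb/s, ASSUMING the headline figure is for a
    port of `headline_width` lanes (4x by default)."""
    return headline_gbps / headline_width


def link_rates(headline_gbps: float, headline_width: int = 4) -> dict:
    """Map each roadmap port width to its aggregate rate in Gb/s,
    ASSUMING bandwidth scales linearly with lane count."""
    lane = per_lane_rate(headline_gbps, headline_width)
    return {width: lane * width for width in PORT_WIDTHS}


if __name__ == "__main__":
    # Headline figures taken from the text: NDR 400Gb/s, GDR 1.6Tb/s.
    for name, headline in (("NDR", 400.0), ("GDR", 1600.0)):
        for width, rate in link_rates(headline).items():
            print(f"{name} {width}x: {rate:g} Gb/s")
```

Under these assumptions, an NDR lane carries 100Gb/s, so a 12x NDR port would aggregate 1200Gb/s. These are nominal signaling figures, not measured throughput.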