
The bfloat16 numerical format

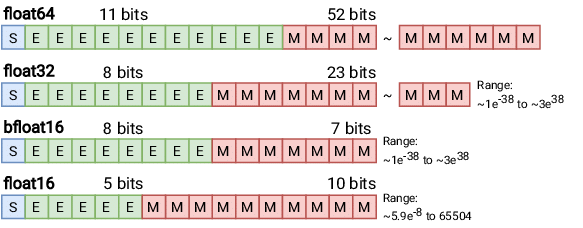

bfloat16 is a custom 16-bit floating-point format for machine learning that is composed of:

- one sign bit,

- eight exponent bits, and

- seven mantissa bits.
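These three fields can be pulled out of a raw 16-bit pattern with a few shifts and masks. The following is a minimal Python sketch. It assumes the IEEE 754-style conventions that bfloat16 shares with float32: an exponent bias of 127 and an implicit leading 1 for normal numbers. The function names are hypothetical. Because bfloat16 is the upper half of a float32, the result can be cross-checked by padding the pattern with 16 zero bits and reading it back as a float32.

```python
import struct
from typing import Tuple

def bfloat16_fields(bits: int) -> Tuple[int, int, int]:
    """Split a 16-bit bfloat16 pattern into (sign, exponent, mantissa)."""
    sign = (bits >> 15) & 0x1       # 1 sign bit
    exponent = (bits >> 7) & 0xFF   # 8 exponent bits
    mantissa = bits & 0x7F          # 7 mantissa bits
    return sign, exponent, mantissa

def decode_normal(bits: int) -> float:
    """Value of a *normal* bfloat16 (exponent field not 0 or 255)."""
    sign, exponent, mantissa = bfloat16_fields(bits)
    # Implicit leading 1, 7 fraction bits, exponent bias 127 (same as float32).
    return (-1) ** sign * (1 + mantissa / 128) * 2.0 ** (exponent - 127)

def decode_via_float32(bits: int) -> float:
    """Reinterpret the pattern as the top 16 bits of a float32."""
    (value,) = struct.unpack("<f", struct.pack("<I", bits << 16))
    return value

bits = 0xBFC0  # sign=1, exponent=127, mantissa=64 (binary 1000000)
print(bfloat16_fields(bits), decode_normal(bits), decode_via_float32(bits))
# (1, 127, 64) -1.5 -1.5
```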

The following diagram shows the internals of four floating-point formats:

- float64

- float32

- bfloat16

- float16
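To compare the four formats numerically, the sketch below derives each format's total width and normal range from its exponent and mantissa widths alone. It assumes IEEE 754-style encoding for all four: a bias of 2^(e-1) - 1, with the all-ones exponent reserved for infinity and NaN. `FORMATS`, `total_bits`, `max_finite`, and `min_normal` are hypothetical names.

```python
# (exponent bits, mantissa bits) per format; every format also has 1 sign bit.
FORMATS = {
    "float64": (11, 52),
    "float32": (8, 23),
    "bfloat16": (8, 7),
    "float16": (5, 10),
}

def total_bits(exp_bits: int, man_bits: int) -> int:
    return 1 + exp_bits + man_bits

def max_finite(exp_bits: int, man_bits: int) -> float:
    """Largest finite value: all-ones mantissa, largest non-reserved exponent."""
    bias = 2 ** (exp_bits - 1) - 1
    return (2 - 2.0 ** -man_bits) * 2.0 ** bias

def min_normal(exp_bits: int) -> float:
    """Smallest positive normal value: exponent field 1, mantissa 0."""
    bias = 2 ** (exp_bits - 1) - 1
    return 2.0 ** (1 - bias)

for name, (e, m) in FORMATS.items():
    print(f"{name:>8}: {total_bits(e, m):2d} bits, "
          f"normal range ~ [{min_normal(e):.3g}, {max_finite(e, m):.4g}]")
```

Because only the exponent width sets the range, bfloat16 and float32 share the same smallest normal value (2^-126) and nearly the same maximum (about 3.4e38). float16, with five exponent bits, tops out at 65504.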

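bfloat16 and float32 have the same dynamic range, because both use eight exponent bits. However, bfloat16 takes up half the memory (16 bits versus 32), at the cost of precision: it keeps 7 mantissa bits where float32 keeps 23.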
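Because a bfloat16 value is the upper 16 bits of a float32, converting between the two is a bit-level operation, and a packed array shrinks to half its size. The following is a minimal Python sketch. It assumes round-to-nearest-even when narrowing, which is a common choice in ML frameworks but is not specified on this page. The function names are hypothetical. NaN inputs are kept as NaN by forcing the top mantissa bit.

```python
import struct

def f32_to_bf16(x: float) -> int:
    """Round x (as float32) to a bfloat16 bit pattern, round-to-nearest-even."""
    # struct raises OverflowError if x lies outside the float32 range.
    (bits,) = struct.unpack("<I", struct.pack("<f", x))
    if (bits & 0x7F800000) == 0x7F800000 and (bits & 0x007FFFFF):
        # NaN: truncate and set the top mantissa bit so it stays a NaN.
        return (bits >> 16) | 0x0040
    # Add 0x7FFF plus the lowest kept bit so that exact ties round to even.
    rounding_bias = 0x7FFF + ((bits >> 16) & 1)
    return ((bits + rounding_bias) >> 16) & 0xFFFF

def bf16_to_f32(b: int) -> float:
    """Widen a bfloat16 pattern to float32 exactly by appending 16 zero bits."""
    (x,) = struct.unpack("<f", struct.pack("<I", b << 16))
    return x

values = [1.0, 3.14159, 1e38, 1e-30]
f32_bytes = struct.pack(f"<{len(values)}f", *values)
bf16_bytes = struct.pack(f"<{len(values)}H", *(f32_to_bf16(v) for v in values))

print(len(f32_bytes), len(bf16_bytes))  # 16 8
for v in values:
    print(v, "->", bf16_to_f32(f32_to_bf16(v)))
```

Both extremes, 1e38 and 1e-30, survive the conversion, although neither fits in float16. The cost shows up as lost digits: 3.14159 comes back as 3.140625.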